Load Network Releases: Understanding Our Testnets

Designed for user/dev exploration and testing.

Features low frequency of breaking changes.

Provides a more reliable environment for developers and users to interact with Load Network.

Acts as a testing ground for the Alphanets.

Characterized by frequent breaking changes and potential instability.

Playground for testing new features, EIPs, and experimental concepts.

Defining Load Network

Load Network is a high-performance blockchain for onchain data storage: cheaply and verifiably store and access any data.

As a high-performance data-centric EVM network, Load Network maximizes scale and transparency for L1s, L2s and data-intensive dApps. Load Network is dedicated to solving the problem of onchain data storage. It offloads storage to Arweave and achieves high-performance computation, decoupled from the EVM L1 itself, by utilizing ao-hyperbeam custom devices. This gives any other chain a way to easily plug in a robust permanent storage layer powered by a hyperscalable network of EVM nodes with bleeding-edge throughput capacity.

Before March 2025, Load Network (abbreviations: LOAD or LN) was named WeaveVM Network (WVM). All existing references to WeaveVM (naming, links, etc.) in the documentation should be treated as Load Network.

Load Network is a high performance blockchain for data storage - cheaply and verifiably store, access, and compute with any data.

Exploring Load Network key features

Let's explore the key features of Load Network:

Load Network achieves enterprise-like performance by limiting block production to beefy hardware nodes while maintaining trustless and decentralized block validation.

This means that anyone with enough $AO tokens to meet the PoS staking threshold (read more about $AO security later on this page), plus the necessary hardware and internet connectivity (super-node, enterprise hardware), can run a node. This approach is inspired by Vitalik Buterin's work in "The Endgame" post.

Block production is centralized, block validation is trustless and highly decentralized, and censorship is still prevented.

These "super nodes" producing Load Network blocks result in a high-performance EVM network.

Raising the gas limit increases the block size and operations per block, affecting both History growth and State growth (mainly relevant for our point here).

Load Network Alphanet raises the gas limit to 500M gas per block (500 Mgas/s at a 1-second block time) and lowers the gas per non-zero byte to 8. These changes result in a larger maximum theoretical block size of ~62 MB (500,000,000 gas / 8 gas per byte = 62.5 million bytes) and, consequently, a network data throughput of ~62 MBps.
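The block-size figure follows directly from the two parameters above. The sketch below reproduces that arithmetic; it assumes all gas is spent on non-zero calldata bytes and ignores per-transaction base costs, so it gives a theoretical ceiling rather than a measured value. The function names are illustrative, not part of any Load Network API.

```typescript
// Parameters quoted in the text for Load Network Alphanet.
const GAS_LIMIT = 500_000_000;    // gas per block
const GAS_PER_NON_ZERO_BYTE = 8;  // calldata cost per non-zero byte
const BLOCK_TIME_SECONDS = 1;     // block time listed in the network parameters

// Ceiling on calldata bytes per block if every unit of gas paid for a non-zero byte.
function maxBlockBytes(gasLimit: number, gasPerByte: number): number {
  return Math.floor(gasLimit / gasPerByte);
}

// Ceiling on data throughput in megabytes per second (1 MB = 1,000,000 bytes).
function throughputMBps(gasLimit: number, gasPerByte: number, blockTimeSeconds: number): number {
  return maxBlockBytes(gasLimit, gasPerByte) / blockTimeSeconds / 1_000_000;
}

console.log(maxBlockBytes(GAS_LIMIT, GAS_PER_NON_ZERO_BYTE));                      // 62500000
console.log(throughputMBps(GAS_LIMIT, GAS_PER_NON_ZERO_BYTE, BLOCK_TIME_SECONDS)); // 62.5
```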

This high data throughput can be handled thanks to block production by super nodes and hardware acceleration.

Up until now, there's been no real-world, scalable DA (altDA) layer ready to handle high data throughput with permanent storage. Load Alphanet reaches a maximum throughput of 62 MBps, with a projection of 125 MBps in mainnet.

Building on HyperBEAM and ao network enables us to package modules of the EVM node stack as HyperBEAM NIF (Native Implemented Function) devices.

This horizontally scalable and parallel architecture allows Load Network EVM nodes to be modularly composable in a totally new paradigm. For example, a node run by Alice might not implement the JSON-RPC component but can pay fees (compute paid in $AO) for its usage from Bob, who has this missing EVM component.

With this model, we will be achieving ao network synergy and interoperability. To read more about the rationale behind this, check the "" blog post

Load Network uses a set of Reth execution extensions (ExExes) to serialize each block in Borsh, then compress it in Brotli before sending it to Arweave. These computations ensure a cost-efficient, permanent history backup on Arweave. This feature is crucial for other L1s/L2s using Load Network for data settlement, aka LOADing.
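To give a feel for why Borsh output is compact, here is a minimal sketch of the Borsh layout rules (fields in declaration order, little-endian integers, `Vec<u8>` as a `u32` length prefix plus raw bytes) applied to a made-up three-field header. `MiniBlock` is a hypothetical type for illustration only; the real ExEx serializes full Reth block objects in Rust, and the Brotli compression step is left out here.

```typescript
// Hypothetical, heavily simplified header used only to illustrate the layout.
interface MiniBlock {
  number: number;      // encoded as u32
  timestamp: number;   // encoded as u32
  extraData: number[]; // encoded as Vec<u8>
}

// Borsh layout: no field names, no padding, integers little-endian,
// Vec<u8> written as a u32 length followed by the bytes themselves.
function encodeMiniBlock(block: MiniBlock): Uint8Array {
  const out = new Uint8Array(4 + 4 + 4 + block.extraData.length);
  const view = new DataView(out.buffer);
  view.setUint32(0, block.number, true);
  view.setUint32(4, block.timestamp, true);
  view.setUint32(8, block.extraData.length, true);
  out.set(block.extraData, 12);
  return out;
}

// Inverse of encodeMiniBlock: the same bytes always map back to the same object.
function decodeMiniBlock(bytes: Uint8Array): MiniBlock {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const length = view.getUint32(8, true);
  return {
    number: view.getUint32(0, true),
    timestamp: view.getUint32(4, true),
    extraData: Array.from(bytes.slice(12, 12 + length)),
  };
}

const encoded = encodeMiniBlock({ number: 1, timestamp: 2, extraData: [0xaa] });
console.log(encoded.length); // 13 bytes: three u32 values plus one payload byte
```

Because the encoding carries no field names or whitespace, an empty block serializes to far fewer bytes than its JSON form before compression is even applied.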

In the , we show the difference between various compression algorithms applied to Borsh-serialized empty block (zero transactions) and JSON-serialized empty block. Borsh serialization combined with Brotli compression gives us the most efficient compression ratio in the data serialization-compression process.

At the time of writing, and since the data protocol's inception, Load Network Arweave ExEx is the largest data protocol on top of Arweave in terms of the number of settled dataitems.

Load Network interfaces with Arweave for more than just block data settling. We have developed the first precompiles that achieve a native bidirectional data pipeline with the Arweave network. In other words, with these precompiles (currently supported by Load Network testnet), you can read data from Arweave and send data to Arweave trustlessly and natively from a Solidity smart contract, creating the first-ever programmable, scalable EVM data, backed by Arweave permanence.

Load's hyper computation, supercharged hardware, and interface with Arweave result in significantly cheaper data settlement costs on Load Network, which include the Arweave fees to cover the archiving costs.

Even compared to temporary blob-based solutions, Load Network still offers a significantly cheaper permanent data solution (calldata).

Load Network is the first EVM L1 to leverage Arweave storage and interoperability natively inside the EVM, and the first EVM L1 to adopt the modular EVM node components paradigm powered by HyperBEAM devices.

To align this alien tech stack, we needed an alien security model. On Load Network, users will pay gas in Load's native gas token, $LOAD. On the node operator side, a node that is not running the full stack of EVM components is required to buy compute from other nodes offering the missing component by paying $AO.

Additionally, as Load is built on top of HyperBEAM (ao network) and Arweave, it's logical to inherit the network security of AO and reinforce Load's self-DA security. For these reasons, Load node operators stake $AO in order to join the EVM L1 block production.

A list of Load Network Alphanet Releases

The table below does not include the list of minor releases between major Alphanet releases. For the full changelogs and releases, check them out here: https://github.com/weaveVM/wvm-reth/releases

v1

v2

v3

v4 (LOAD Inception)

v5

Get set up with the onchain data center

Let's make it easy to get going with Load Network. In this doc, we'll go through the simplest ways to use Load across the most common use cases:

The easiest way to interface with Load Network storage capabilities is through the Load Cloud Platform (LCP) web app at cloud.load.network. LCP is powered by HyperBEAM's LS3 storage layer and offers programmatic access through load_acc API keys.

The best data pipeline for massive uploads is the Load [email protected] HyperBEAM-powered storage layer. The LS3 storage layer, run through a special set of HyperBEAM nodes, serializes S3 objects as ANS-104 DataItems by default, maintaining provenance and integrity when the uploader wishes to move an S3 object from the temporal storage layer to Arweave in a single HTTP API request.

To load huge amounts of data to the Load Network EVM L1 without being tied to the technical network limitations (tx size, block size, network throughput), you can use the load0 bundling service. It's a straightforward REST-based bundling service that lets you upload data and retrieve it instantly, at scale:

For more examples, check out the load0 documentation

One of the coolest features offered by the LS3 layer's compatibility with the ANS-104 data standard is the out-of-the-box compatibility with the Arweave ecosystem, despite LS3 being an offchain temporary storage layer. For that reason, we have built an ANS-104 upload service on top of LS3, compatible with ARIO's Turbo standard, meaning you can use the official Turbo SDK along with Load's custom upload service endpoint to store temporary Arweave dataitems. Check out how to use it and learn more about xANS-104 data provenance, lineage and governance here.

Chains like Avalanche, Metis and RSS3 use Load Network as a decentralized archive node. This works by feeding all new and historical blocks to an archiving service you can run yourself, pointed to your network's RPC.

As well as storing all real-time and historical data, Load Network can be used to reconstruct full chain state, effectively replicating exactly what archive nodes do, but with a decentralized storage layer underneath. Read here to learn how.

With 125 MB/s data throughput and long-term data guarantees, Load Network can handle DA for every known L2, with 99.8% room to spare.

Right now there are 4 ways you can integrate Load Network for DA:

DIY docs are a work in progress, but the commit to add support for Load Network in Dymension can be used as a guide to implement Load DA elsewhere.

If your data is already on another storage layer like IPFS, Filecoin, Swarm or AWS S3, you can use specialized importer tools to migrate.

The HyperBEAM Load S3 node provides a 1:1 compatible development interface for applications using AWS S3 for storage, keeping method names and parameters intact so the only change should be one line: the endpoint.

The load-lassie import tool is the recommended way to easily migrate data stored via Filecoin.

Just provide the CID you want to import to the API, e.g.:

https://lassie.load.rs/import/<CID>

The importer is also self-hostable and further documented here.

Switching from Swarm to Load is as simple as changing the gateway you already use to resolve content from Swarm.

before: https://api.gateway.ethswarm.org/bzz/<hash>

after: https://swarm.load.rs/bzz/<hash>

The first time Load's Swarm gateway sees a new hash, it uploads it to Load Network and serves it directly for subsequent calls. This effectively makes your Swarm data permanent on Load while maintaining the same hash.
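Both the Filecoin and Swarm migrations above come down to building or rewriting a URL. A minimal sketch of those two string transformations follows; the helper names are hypothetical, and the URL prefixes are the ones quoted in this section. It does not perform any network requests.

```typescript
const SWARM_PUBLIC_PREFIX = "https://api.gateway.ethswarm.org/bzz/";
const LOAD_SWARM_PREFIX = "https://swarm.load.rs/bzz/";
const LASSIE_IMPORT_PREFIX = "https://lassie.load.rs/import/";

// Point an existing Swarm gateway URL at Load's Swarm gateway, keeping the hash.
// URLs that do not use the public Swarm gateway are returned unchanged.
function toLoadSwarmUrl(url: string): string {
  if (!url.startsWith(SWARM_PUBLIC_PREFIX)) return url;
  return LOAD_SWARM_PREFIX + url.slice(SWARM_PUBLIC_PREFIX.length);
}

// Build the load-lassie import URL for a given Filecoin/IPFS CID.
function lassieImportUrl(cid: string): string {
  return LASSIE_IMPORT_PREFIX + encodeURIComponent(cid);
}

console.log(toLoadSwarmUrl("https://api.gateway.ethswarm.org/bzz/<hash>"));
console.log(lassieImportUrl("<CID>"));
```

In an application, this kind of rewrite can usually live in one configuration constant, which is what makes the switch a one-line change.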

About Load Network Native JSON-RPC methods

eth_getArweaveStorageProof JSON-RPC method

This JSON-RPC method lets you retrieve the Arweave storage proof for a given Load Network block number.

curl -X POST https://alphanet.load.network \
  -H "Content-Type: application/json" \
  --data '{
    "jsonrpc":"2.0",
    "method":"eth_getArweaveStorageProof",
    "params":["8038800"],
    "id":1
  }'

eth_getWvmTransactionByTag JSON-RPC method

For Load Network L1 tagged transactions, the eth_getWvmTransactionByTag lets you retrieve a transaction hash for a given name-value tag pair.
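For programmatic use, the two request bodies can be built with small typed helpers. This is a sketch that only mirrors the payload shapes used in this document's curl examples; the helper names and the `JsonRpcRequest` type are hypothetical, and sending the request (for example with `fetch`) is left to the caller.

```typescript
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: unknown[];
}

// Body for eth_getArweaveStorageProof; the block number is passed as a string,
// as in the curl example.
function storageProofRequest(blockNumber: string, id: number = 1): JsonRpcRequest {
  return { jsonrpc: "2.0", id, method: "eth_getArweaveStorageProof", params: [blockNumber] };
}

// Body for eth_getWvmTransactionByTag; the tag is a [name, value] pair.
function transactionByTagRequest(name: string, value: string, id: number = 1): JsonRpcRequest {
  return { jsonrpc: "2.0", id, method: "eth_getWvmTransactionByTag", params: [{ tag: [name, value] }] };
}

console.log(JSON.stringify(storageProofRequest("8038800")));
console.log(JSON.stringify(transactionByTagRequest("name", "value")));
```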

ELI5 Load Network

Load is a high-performance blockchain built towards the goal of solving the EVM storage dilemma with Arweave and ao. It gives the coming generation of high-performance chains a place to settle and store onchain data, without worrying about cost, availability, or permanence.

Load Network offers scalable and cost-effective permanent storage by using Arweave as a decentralized hard drive, both at the node and smart contract layer, HyperBEAM as modular stack of EVM node components, and ao network for compute and network security. This makes it possible to store large data sets and run web2-like applications without incurring EVM storage fees.

curl -X POST "https://load0.network/upload" \
  --data-binary "@./video.mp4" \
  -H "Content-Type: video/mp4"

curl https://alphanet.load.network \
  -X POST \
  -H "Content-Type: application/json" \
  -d '{
    "jsonrpc": "2.0",
    "id": 1,
    "method": "eth_getWvmTransactionByTag",
    "params": [{
      "tag": ["name", "value"]
    }]
  }'

Load Network mainnet is being built to be the highest performing EVM blockchain focusing on data storage, having the largest baselayer transaction input size limit (~16MB), the largest ever EVM transaction (~0.5TB 0xbabe transaction), very high network data throughput (multi-gigagas per second), high TPS, decentralization, full data storage stack offering (permanent and temporal), decentralized data gateways and data bundlers.

Load Network achieves high decentralization by using Arweave as a decentralized hard drive, HyperBEAM as a compute marketplace of EVM node components, and permissionless block production participation (running a node). Load Network will offer both permanent and temporary data storage while maintaining decentralized and censorship-resistant retrieval and ingress (gateways, bundling services, etc.).

Chains like Metis, RSS3 and Dymension use Load Network to permanently store onchain data, acting as a decentralized archival node. If you look at the common problems that are flagged up on L2Beat, a lot of it has to do with centralized sources of truth and data that can’t be independently audited or reconstructed in a case where there’s a failure in the chain. Load adds a layer of protection and transparency to L2s, ruling out some of the failure modes of centralization. Learn more about the wvm-archiver tool here.

Load Network can plug in to a typical EVM L2's stack as a DA layer that's 10-15x cheaper than solutions like Celestia and Avail, and guarantees data permanence on Arweave. LN was built to handle DA for the coming generation of supercharged rollups. With a throughput of ~62MB/s, it could handle DA for every major L2 and still have 99%+ capacity left over.

You can check out the custom DA-ExEx to make use of LOAD-DA in any Reth node in less than 80 LoC, as well as the EigenDA-LN Sidecar Server Proxy, which uses EigenDA's data availability along with Load Network securing its archiving.

Load Network offers scalable and cost-effective storage by using Arweave as a decentralized hard drive, and hyperbeam as a decentralized cloud. This makes it possible to store large data sets and run web2-like applications without incurring EVM storage fees.

We have developed the first-ever Reth precompiles to natively facilitate a bidirectional data pipeline with Arweave from the smart contract API level. Check out the full list of Load precompiled contracts here.

Load Network is an EVM compatible blockchain, therefore, rollups can be deployed on Load the same as rollups on Ethereum. In contrast to Ethereum or other EVM L1s, rollups deployed on top of Load benefit out-of-the-box from the data-centric features provided by Load (for rollup data settlement and DA).

Rollups deployed on Load Network use the native Load gas token (tLOAD on Alphanet), similar to how ETH is used for OP rollups on Ethereum.

For example, we released a technical guide for developers interested in deploying OP-Stack rollups on Load. Check it out here.

Load Network is being built with the vision of being the onchain data center. To accomplish this vision, we have started working on several web2 and web3 data pipelines into Load and Arweave, with a web2 cloud experience. Start using Load Cloud now!

Load Network Cloud Platform — The UI of the onchain data center

Permacast — A decentralized media platform on Load Network

Tapestry Finance — Uniswap V2 fork

shortcuts.bot — short links for Load Network txids

— subdomain resolver for Load Network content

— Dropbox onchain alternative

— Onchain Instagram

— onchain publishing toolkit

— Tokenize any data on Load Network

— Hyperlane bridge (Load Alphanet <> Ethereum Holesky)

— a club for permanent content preservation.

— deploy a Dymension roll-app using Load DA

Useful Links

Load Network custom HyperBEAM devices

HyperBEAM is a client implementation of the AO-Core protocol, written in Erlang. It can be seen as the 'node' software for the decentralized operating system that AO enables, abstracting hardware provisioning and details from the execution of individual programs.

HyperBEAM node operators can offer the services of their machine to others inside the network by electing to execute any number of different devices, charging users for their computation as necessary.

Each HyperBEAM node is configured using the ~meta@1.0 device, which provides an interface for specifying the node's hardware, supported devices, metering and payments information, amongst other configuration options. For more details, check out the HyperBEAM codebase:

The repository is our HyperBEAM fork with custom devices such as , , and

Our development motto is driven by the manifesto initiated during Arweave Day Berlin 2025.

Our main hyperbeam development is hosted on

The RISC-V Execution Machine device

The EVM consensus light client

The [email protected] device is an EVM/Ethereum consensus light client built into the HyperBEAM devices stack. With helios, node operators can trustlessly connect to EVM RPCs with a very lightweight, multichain and secure setup, and no historical syncing overhead. With this device, every hyperbeam node can turn into a verifiable EVM RPC endpoint.

Explore compute options available with Load Network

Combining the Load EVM with custom HyperBEAM devices enables a wide range of options, from smart contracts to WASM workers to serverless GPU functions.

In this page we will briefly go over the different options within the extended Load Network ecosystem.

The Load EVM offers native EVM compute with typical smart contracts, blobs and calldata standards that are all 1:1 compatible with Ethereum and other EVM networks. As a specialized storage and DA chain, the Load L1 is optimized to enable data-intensive dApps.

Load Network Compatibility with the standards

Load Network EVM is built on top of Reth, making it compatible as a network with existing EVM-based applications. This means you can run your current Ethereum-based projects on LN without significant modifications, leveraging the full potential of the EVM ecosystem.

Load Network EVM doesn't introduce new opcodes or breaking changes to the EVM itself, but it uses ExExes and adds custom precompiles:

The EIP-4844 data agent

The stores Ethereum's blobs temporarily on the , serialized as . Based on need/demand, DataItems can be deterministically pushed to Arweave while maintaining integrity and provenance.

DataItems stored on the [email protected] device can be retrieved from the Hybrid Gateway as if they are Arweave txs:

Retrieve blob versioned hash and the associated ANS-104 dataitem id by versioned hash

gas per non-zero byte: 8

gas limit: 500_000_000

block time: 1s

gas/s: 500 Mgas/s

data throughput: ~62 MBps

RISC-V custom device source code: https://github.com/loadnetwork/load_hb/tree/main/native/riscv_em_nif

Load Network is a hybrid of the EVM and AO's HyperBEAM. The Load Network team runs HyperBEAM instances (permissionless nodes running the AO compute canonical stack), therefore it's possible to leverage the highly scalable AO compute (processes, hyper-aos, Lua serverless functions) within the Load stack. Connect to a node here.

Additionally, we have developed an EVM compute layer (bytecode calculator) as a HyperBEAM device. Learn more.

Moreover, in HyperBEAM land, the Kernel Execution Machine ([email protected] device) allows for quasi-arbitrary GPU-instructions compute execution for .wgsl functions (shaders, kernels). Get started.

Although this is not compute at the user/consumer level, Load maintains several Reth execution extensions (post block-execution compute logic) that can benefit Reth and the wider set of EVM developers. Learn more.

LN-DA plugin ExEx

This introduces a new DA interface for EVM rollups that doesn't require changes to the sequencer or network architecture. It's easily added to any Reth client with just 80 lines of code by importing the DA ExEx code into the ExExes directory, making integration simple and seamless. Get the code here and the installation guide here.

About Reth Execution Extensions (ExEx)

ExEx is a framework for building performant and complex off-chain infrastructure as post-execution hooks.

Reth ExExes can be used to implement rollups, indexers, MEV bots and more with >10x less code than existing methods. Check out the Reth ExEx announcement by Paradigm https://www.paradigm.xyz/2024/05/reth-exex

In the following pages we will list the ExExes developed and used by Load Network.

Borsh binary serializer ExEx

Borsh stands for Binary Object Representation Serializer for Hashing and is a binary serializer developed by the NEAR team. It is designed for security-critical projects, prioritizing consistency, safety, and speed, and comes with a strict specification.

The ExEx utilizes Borsh to serialize and deserialize block objects, ensuring a bijective mapping between objects and their binary representations. Get the ExEx code

An open source directory of Reth ExExes

ExEx.rs is an open source directory for Reth's ExExes. You can think of it as a "chainlist of ExExes".

We believe that curating ExExes will accelerate their development by making examples and templates easily discoverable. Add your ExEx today!

Explore Load Network developed ExExes

In the following section you will explore the Execution Extensions developed by our team to power Load Network (formerly WeaveVM).

Helios is a trustless, efficient, and portable multichain light client written in Rust.

Helios converts an untrusted centralized RPC endpoint into a safe unmanipulable local RPC for its users. It syncs in seconds, requires no storage, and is lightweight enough to run on mobile devices.

Helios has a small binary size and compiles into WebAssembly. This makes it a perfect target to embed directly inside wallets and dapps.

Check out the official repository here

The [email protected] device, as per its current implementation, starts the helios client (and JSON-RPC server) when the hyperbeam node starts. The JSON-RPC server is spawned as a separate process running in parallel behind port 8545 (the conventional Ethereum JSON-RPC port).

The device supports all of the methods supported by helios. Check the full list here

As this device is supported on the hb.load.rs hyperbeam node, it's explicitly assigned the eth.rpc.rs endpoint for the Ethereum mainnet network.

local

using rpc.rs

device source code: https://github.com/loadnetwork/load_hb/tree/main/native/helios_nif

curl -X GET https://load-blobscan-agent.load.network/v1/blob/$BLOB_VERSIONED_HASH

You can find the proxy server codebase here: https://github.com/weaveVM/wvm-rpc-proxy

You can find the proxy server codebase here: https://github.com/weaveVM/proxy-rpc

curl -X POST -H "Content-Type: application/json" --data '{"jsonrpc":"2.0","method":"eth_blockNumber","params":[],"id":1}' http://127.0.0.1:8545

curl -X POST -H "Content-Type: application/json" --data '{"jsonrpc":"2.0","method":"eth_blockNumber","params":[],"id":1}' https://eth.rpc.rs

curl -X GET https://load-blobscan-agent.load.network/v1/stats

curl -X GET https://load-blobscan-agent.load.network/v1/info

git clone https://github.com/weavevm/wvm-proxy-rpc.git
cd wvm-proxy-rpc
cargo build && cargo shuttle run --port 3000

curl -X POST http://localhost:3000 -H "Content-Type: application/json" -d '{"jsonrpc":"2.0","method":"eth_chainId","params":[],"id":1}'

git clone https://github.com/weavevm/proxy-rpc.git
cd proxy-rpc
npm install && npm run start

curl -X POST http://localhost:3000 -H "Content-Type: application/json" -d '{"jsonrpc":"2.0","method":"eth_chainId","params":[],"id":1}'

Using load:// data retrieval protocol

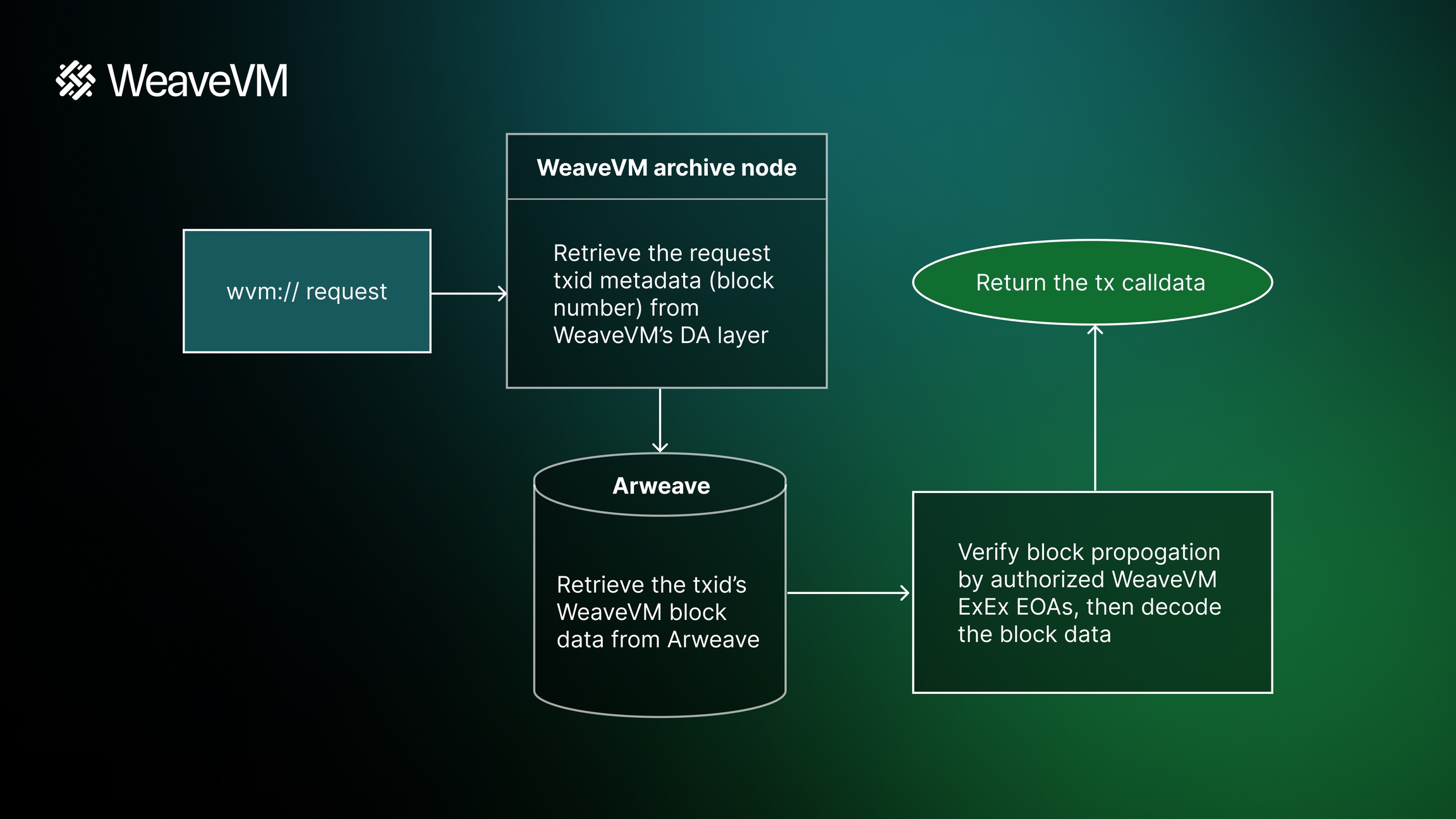

Load Network Data Retriever (load://) is a protocol for retrieving data from the Load Network (EVM). It leverages the LN DA layer and Arweave’s permanent storage to provide trustless access to LN transaction data through both networks, whether that’s data which came from LN itself, or L2 data that was settled to LN.

Many chains solve this problem by providing query interfaces to archival nodes or centralized indexers. For Load Network, Arweave is the archival node, and can be queried without special tooling. However, the data LN stores on Arweave is also encoded, serialized and compressed, making it cumbersome to access. The load:// protocol solves this problem by providing an out-of-the-box way to grab and decode Load Network data while also checking it has been DA-verified.

The data retrieval pipeline ensures that when you request data associated with a Load Network transaction, it passes through at least one DA check (currently through LN's self-DA).

It then retrieves the transaction block from Arweave, published by LN ExExes, decodes the block (decompresses Brotli and deserializes Borsh), and scans the archived sealed block transactions within LN to locate the requested transaction ID, ultimately returning the calldata (input) associated with it.
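The last step of that pipeline, scanning the decoded block for the requested transaction and returning its calldata, can be sketched as follows. This assumes the block has already been fetched from Arweave, Brotli-decompressed, and Borsh-deserialized; `ArchivedBlock` and `ArchivedTx` are hypothetical shapes for illustration and do not reflect the actual archived block schema.

```typescript
// Hypothetical, simplified view of a decoded archived block.
interface ArchivedTx {
  hash: string;  // 0x-prefixed transaction hash
  input: string; // 0x-prefixed calldata
}

interface ArchivedBlock {
  number: number;
  transactions: ArchivedTx[];
}

// Return the calldata of the transaction with the given id, or null if the
// block does not contain it. Hash comparison is case-insensitive.
function findCalldata(block: ArchivedBlock, txId: string): string | null {
  const wanted = txId.toLowerCase();
  for (const tx of block.transactions) {
    if (tx.hash.toLowerCase() === wanted) return tx.input;
  }
  return null;
}

const block: ArchivedBlock = {
  number: 1,
  transactions: [
    { hash: "0xAA", input: "0x1234" },
    { hash: "0xbb", input: "0xdeadbeef" },
  ],
};
console.log(findCalldata(block, "0xaa")); // 0x1234
```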

Currently, the load:// gateway server provides two methods: one for general data retrieval and another specifically for transaction data posted by the load-archiver nodes. To retrieve calldata for any transaction on Load Network, you can use the following command:

The second method is specific to load-archiver nodes because it decompresses the calldata and then deserializes its Borsh encoding according to a predefined structure. This is possible because the data encoding of load-archiver data is known to include an additional layer of Borsh-Brotli encoding before the data is settled on LN.

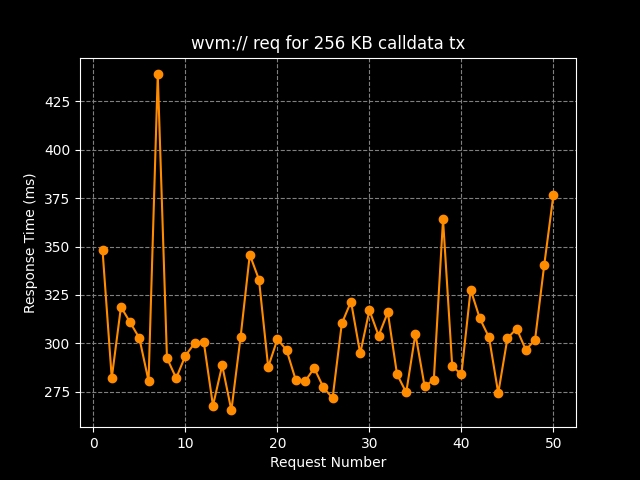

The latency includes the time spent fetching data from LN EVM RPC and the Arweave gateway, as well as the processing time for Brotli decompression, Borsh deserialization, and data validity verification.

About Load Network optimistic & high performance data layer

load0 is Bundler's Large Bundle on steroids -- a cloud-like experience to upload and download data from Load Network using the Bundler's 0xbabe2 transaction format powered with SuperAccount & S3 under the hood.

First, the user sends data to the load0 REST API /upload endpoint -- the data is pushed to load0's S3 bucket and returns an optimistic hash (keccak hash) which allows the users to instantly retrieve the object data from load0.

After being added to the load0 bucket, the object gets added to the orchestrator queue that uploads the optimistic cached objects to Load Network. Using Large Bundle & SuperAccount, the S3 bucket objects get sequentially uploaded to Load and therefore, permanently stored while maintaining very fast uploads and downloads. Object size limit: 1 byte -> 2GB.

Also, for endpoint parity with bundler.load.rs, you can use:

Returns:

An object's data can be accessed via:

optimistic caching: https://load0.network/resolve/{Bundle.optimistic_hash}

from Load Network (once settled): https://bundler.load.rs/v2/resolve/{Bundle.bundle_txid}
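A client can pick between the two access paths based on whether the bundle has settled. The sketch below mirrors the fields of the `Bundle` struct shown in this document; the `resolveUrl` helper is hypothetical and simply chooses the matching URL from the two patterns listed above.

```typescript
// Mirrors the fields of the Bundle struct returned by load0.
interface Bundle {
  id: number;
  optimistic_hash: string;
  bundle_txid: string;
  data_size: number;
  is_settled: boolean;
  content_type: string;
}

// Prefer the settled Load Network copy when available,
// otherwise fall back to load0's optimistic cache.
function resolveUrl(bundle: Bundle): string {
  return bundle.is_settled
    ? `https://bundler.load.rs/v2/resolve/${bundle.bundle_txid}`
    : `https://load0.network/resolve/${bundle.optimistic_hash}`;
}

const pending: Bundle = {
  id: 1,
  optimistic_hash: "0xabc",
  bundle_txid: "",
  data_size: 1024,
  is_settled: false,
  content_type: "video/mp4",
};
console.log(resolveUrl(pending)); // https://load0.network/resolve/0xabc
```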

Source code:

Learn how to use the Load S3 storage layer along with LCP's load_acc API keys

First, you have to create a bucket from the cloud.load.network dashboard if the bucket you want to create scoped keys for does not already exist.

After creating the bucket, navigate to the "API Keys" tab and create a new load_acc key with a label, then scope it to the desired bucket.

After creating a bucket and load_acc API key, you can now interact with the Load S3 storage layer via S3-compatible SDKs, such as the official AWS S3 SDK. Here is the S3 client configuration as it should be:

And that's it, that's all you need to start interacting with your HyperBEAM-powered S3 temporary storage!

The first Revm EVM device on HyperBEAM

The @evm1.0 device: an EVM bytecode emulator built on top of Revm (version v22.0.1).

The device not only allows evaluation of bytecode (signed raw transactions) against a given state db, but also supports appchain creation, statefulness, EVM context customization (gas limit, chain id, contract size limit, etc.), and the elimination of the block gas limit by substituting it with a transaction-level gas limit.

This device is experimental, in PoC stage

Live demo at

eval_bytecode() takes 3 inputs, a signed raw transaction (N.B: chain id matters), a JSON-stringified state db and the output state path (here in this device it's in )

device source code:

hb device interface:

nif tests:

ao process example:

The Load Network Gateway Stack: Fast, Reliable Access to Load Network Data

All storage chains have the same issue: even if the data storage is decentralized, retrieval is handled by a centralized gateway. A solution to this problem is just to provide a way for anyone to easily run their own gateway – and if you’re an application building on Load Network, that’s a great way to ensure content is rapidly retrievable from the blockchain.

When Relic, a photo sharing dApp that uses LN bundles for storage, started getting traction, the default LN gateway became a bottleneck for the Relic team. The way data is stored inside bundles (hex-encoded, serialized, compressed) can make it resource-intensive to decode and present media on demand, especially when thousands of users are doing so in parallel.

In response, we developed two new open source gateways: one , and .

The LN Gateway Stack introduces a powerful new way to access data from Load Network bundles, combining high performance with network resilience. At its core, it’s designed to make bundle data instantly accessible while contributing to the overall health and decentralization of the LN.

The first ever temporary, private, payment-gated ANS-104 Dataitems

The x402 protocol is an open framework that enables machine-to-machine payments on the web by standardizing how clients and services exchange value over HTTP. Building on the HTTP 402 "Payment Required" status code, x402 establishes a clear transaction flow where a server responds to resource requests with payment instructions (including amount and recipient), the client provides payment authorization, a payment facilitator verifies and settles the transaction, and the server delivers the requested resource along with payment confirmation.

This protocol allows automated agents, crawlers, and digital services to conduct transactions programmatically without requiring traditional accounts, subscriptions, or API keys. By creating a common language for web-based payments, x402 enables new monetization models such as pay-per-use access, micropayments for AI agents purchasing from multiple merchants, and flexible payment schemes including immediate settlement via stablecoins or deferred settlement through traditional payment rails like credit cards and bank accounts.
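The request flow described above (request, 402 with payment instructions, payment authorization, facilitator check, resource delivery) can be modelled as a small in-memory simulation. Everything here is a hypothetical stand-in: the types, field names, and facilitator logic are illustrative assumptions and do not reproduce the x402 wire format or any real facilitator API.

```typescript
interface PaymentRequirements { amount: number; recipient: string; }
interface PaymentAuthorization { amount: number; recipient: string; payer: string; }

type ServerResponse =
  | { status: 402; requirements: PaymentRequirements }
  | { status: 200; body: string; settled: true };

// Stand-in for a payment facilitator: accepts the authorization only if it
// pays the right recipient at least the required amount.
function facilitatorSettles(auth: PaymentAuthorization, required: PaymentRequirements): boolean {
  return auth.recipient === required.recipient && auth.amount >= required.amount;
}

// Server side: without a valid authorization, answer 402 with instructions;
// with one, deliver the resource together with a settlement confirmation.
function handleRequest(
  price: PaymentRequirements,
  resource: string,
  auth?: PaymentAuthorization,
): ServerResponse {
  if (auth === undefined || !facilitatorSettles(auth, price)) {
    return { status: 402, requirements: price };
  }
  return { status: 200, body: resource, settled: true };
}

const price: PaymentRequirements = { amount: 5, recipient: "merchant" };
const first = handleRequest(price, "dataitem-bytes");
console.log(first.status); // 402

const retry = handleRequest(price, "dataitem-bytes", { amount: 5, recipient: "merchant", payer: "agent" });
console.log(retry.status); // 200
```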

Plug Load Network high-throughput DA into any Reth node

Adding a DA layer usually requires base-level changes to a network’s architecture. Typically, DA data is posted either by sending calldata to the L1 or through blobs, with the posting done at the sequencer level or by modifying the rollup node’s code.

This ExEx introduces an emerging, non-traditional DA interface for EVM rollups. No changes are required at the sequencer level, and it’s all handled via the ExEx, which is easy to add to any Reth client in just 80 lines of code.

The gateway stack solves several critical needs in the LN ecosystem:

Rapid data retrieval

Through local caching with SQLite, the gateway dramatically reduces load times (4-5x) for frequently accessed bundled data. No more waiting for remote data fetches – popular content is served instantly from the gateway node.

For relic.bot, this slashed feed loading times from 6-8 seconds to near-instant.

Network health

By making it easy to run your own gateway, the stack promotes a more decentralized network. Each gateway instance contributes to network redundancy, ensuring data remains accessible even if some nodes go offline.

Running your own LN gateway is pretty straightforward. The gateway stack is designed for easy deployment, directly to your server or inside a Docker container.

With Docker, you can have a gateway up and running in minutes:

For rustaceans, rusty-gateway is deployable on a Rust host like shuttle.dev – get the repo here and Shuttle deployment docs here.

Under the hood, the gateway stack features:

SQLite-backed persistent cache

Content-aware caching with automatic MIME type detection

Configurable cache sizes and retention policies

Application-specific cache management

Automatic cache cleanup based on age and size limits

Health monitoring and statistics
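To make the age and size limits in the list above concrete, here is a minimal in-memory sketch of a cleanup pass. It is an assumption about how such a policy could work, not the gateway's actual SQLite implementation; `CacheEntry` and `cleanup` are hypothetical names.

```rust
/// One cached bundle item (hypothetical shape, not the gateway's schema).
#[derive(Debug, Clone, PartialEq)]
struct CacheEntry {
    key: String,
    size_bytes: u64,
    stored_at_secs: u64,
}

/// Drops entries older than `max_age_secs`, then walks the rest from newest
/// to oldest and keeps each entry that still fits in `max_total_bytes`.
fn cleanup(
    mut entries: Vec<CacheEntry>,
    now_secs: u64,
    max_age_secs: u64,
    max_total_bytes: u64,
) -> Vec<CacheEntry> {
    // Age limit: keep only entries that are still fresh.
    entries.retain(|e| now_secs.saturating_sub(e.stored_at_secs) <= max_age_secs);
    // Size limit: newest first, so recent content wins the budget.
    entries.sort_by(|a, b| b.stored_at_secs.cmp(&a.stored_at_secs));
    let mut total = 0u64;
    let mut kept = Vec::new();
    for e in entries {
        if total + e.size_bytes <= max_total_bytes {
            total += e.size_bytes;
            kept.push(e);
        }
    }
    kept
}
```

Preferring the newest entries is one possible policy; a gateway serving popular content might instead rank by access count.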

The gateway exposes a simple API for accessing bundle data:

GET /bundle/:txHash/:index

This endpoint handles the job of data retrieval, caching, and content-type detection behind the scenes.
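As a small illustration of the route shape, the helpers below build the request path from a bundle transaction hash and an item index. The gateway base URL in the usage is a placeholder, not a real deployment.

```rust
/// Builds the request path for one item of a bundle.
/// `tx_hash` is the bundle transaction hash, `index` the item position in it.
fn bundle_path(tx_hash: &str, index: u32) -> String {
    format!("/bundle/{}/{}", tx_hash, index)
}

/// Joins a gateway base URL with the bundle path, tolerating a trailing slash.
fn bundle_url(gateway_base: &str, tx_hash: &str, index: u32) -> String {
    format!(
        "{}{}",
        gateway_base.trim_end_matches('/'),
        bundle_path(tx_hash, index)
    )
}
```

For example, `bundle_url("https://gateway.example/", "0xabc", 2)` yields `https://gateway.example/bundle/0xabc/2`.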

The Load Network gateway stack was built in response to problems of scale – great problems to have as a new network gaining traction. LN bundle data is now more accessible, resilient and performant. By running a gateway, you’re not just improving your own access to LN data – you’re contributing to a more robust, decentralized network.

Test the gateways:

curl -X POST "https://load0.network/upload" \

--data-binary "@./video.mp4" \

-H "Content-Type: video/mp4" \

-H "X-Load-Authorization: $YOUR_LCP_AUTH_TOKEN"

GET https://load0.network/download/{optimistic_hash}

GET https://load0.network/resolve/{optimistic_hash}

GET https://load0.network/bundle/optimistic/{op_hash}

GET https://load0.network/bundle/load/{bundle_txid}

pub struct Bundle {

pub id: u32,

pub optimistic_hash: String,

pub bundle_txid: String,

pub data_size: u32,

pub is_settled: bool,

pub content_type: String

}

import { S3Client } from "@aws-sdk/client-s3";

const endpoint = "https://api.load.network/s3"; // LS3 HyperBEAM cluster

const accessKeyId = "load_acc_YOUR_LCP_ACCESS_KEY"; // get yours from cloud.load.network

const secretAccessKey = "";

// Initialize the S3 client

const s3Client = new S3Client({

region: "us-east-1",

endpoint,

credentials: {

accessKeyId,

secretAccessKey,

},

forcePathStyle: true, // required

});

#[rustler::nif]

fn eval_bytecode(signed_raw_tx: String, state: String, cout_state_path: String) -> NifResult<String> {

let state_option = if state.is_empty() { None } else { Some(state) };

let evaluated_state: (String, String) = eval(signed_raw_tx, state_option, cout_state_path)?;

Ok(evaluated_state.0)

}

#[rustler::nif]

fn get_appchain_state(chain_id: &str) -> NifResult<String> {

let state = get_state(chain_id);

Ok(state)

}

git clone https://github.com/weavevm/bundles-gateway.git

cd bundles-gateway

docker compose up -d

The x402 Foundation is a collaborative initiative being established by Cloudflare and Coinbase with the mission of encouraging widespread adoption of the x402 protocol.

We are proud to be the first team that has worked on the intersection of x402 micropayments protocol and Arweave's ANS-104 data format. We have integrated the x402 protocol in Load's custom HyperBEAM device ([email protected] device), resulting in the first ever expirable, paywalled, privately shareable ANS-104 DataItems.

The x402 micropayments have been integrated at the ANS-104 gateway sidecar level of the s3 device. Check out the source code.

There are two ways to create an x402 paywalled, private, expirable ANS-104 DataItem: a user-friendly one via the LCP dashboard, and a programmatic, DIY one. Let's explore both.

x402 has been integrated on LCP, making it possible for LCP users to create x402 paywalled, expirable links for their private objects (ANS-104 DataItems) on Load S3. The process is simple: fill in the parameters of the Create Payment Link request (expiry, payee EOA, and USDC amount) and the dashboard will generate a ready-to-use x402 expirable DataItem URL.

The DIY method means doing what the LCP dashboard abstracts away. First, create a private LS3 ANS-104 DataItem and upload it to your LCP bucket (load-s3-agent reference):

After that, generate the x402 signed shareable receipt using the HyperBEAM LS3 sidecar:

This curl request returns a base64 string, which you use to share the x402 paywalled ANS-104 DataItem as follows: https://402.load.network/$base64_string

The data protocol transactions follow the ANS-104 data item specifications. Each LN precompile transaction is posted on Arweave, after brotli compression, with the following tags:

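| Tag | Value | Description |
| --- | --- | --- |
| LN:Precompile | true | Data protocol identifier |
| Content-Type | application/octet-stream | Arweave data transaction MIME type |
| LN:Encoding | Brotli | Transaction's data encoding algorithms |
| LN:Precompile-Address | $value | The decimal precompile number (e.g. 0x17 has the Tag Value of 23) |

Load Network Reth Precompiles Address: 5JUE58yemNynRDeQDyVECKbGVCQbnX7unPrBRqCPVn5Z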

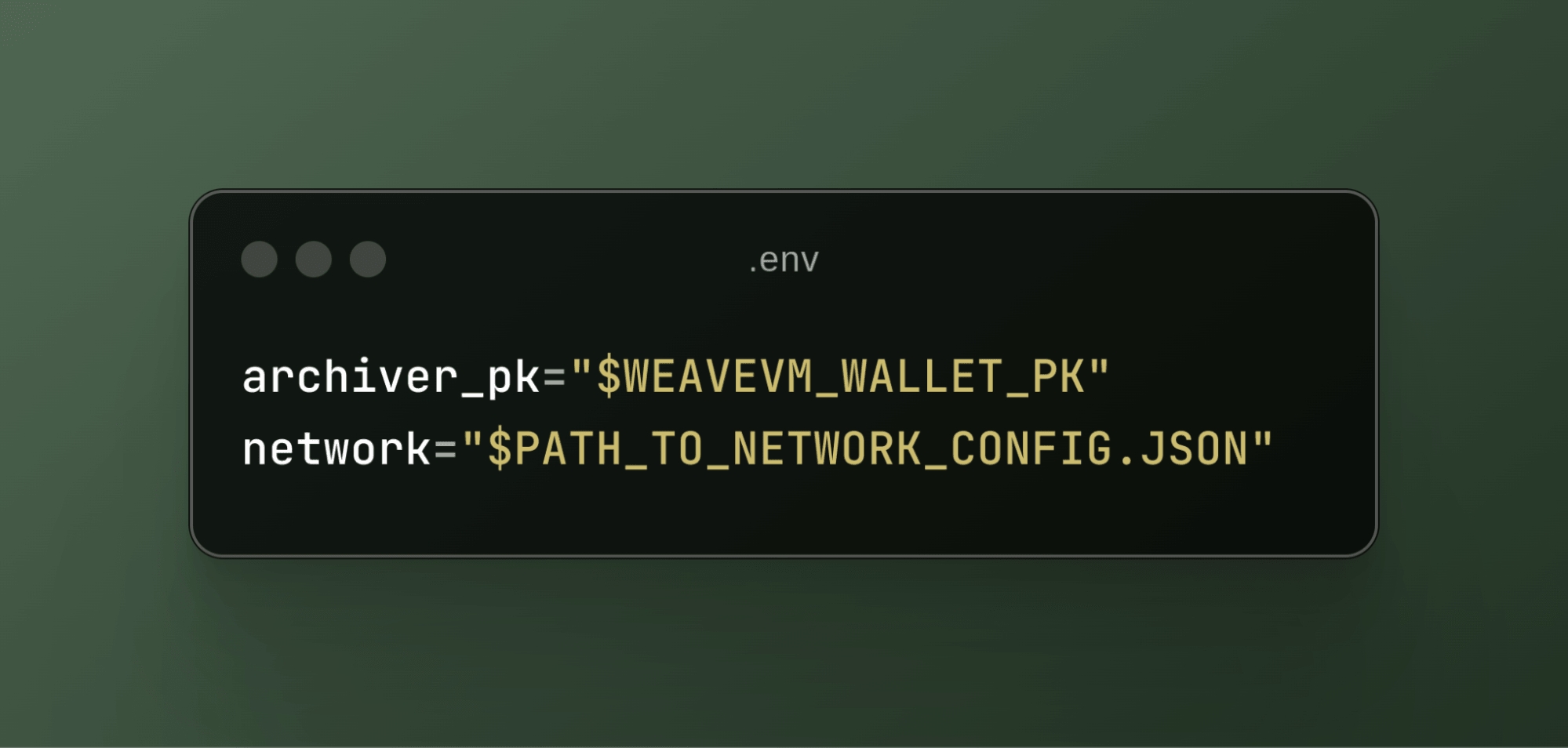

First, you’ll need to add the following environment variables to your Reth instance:

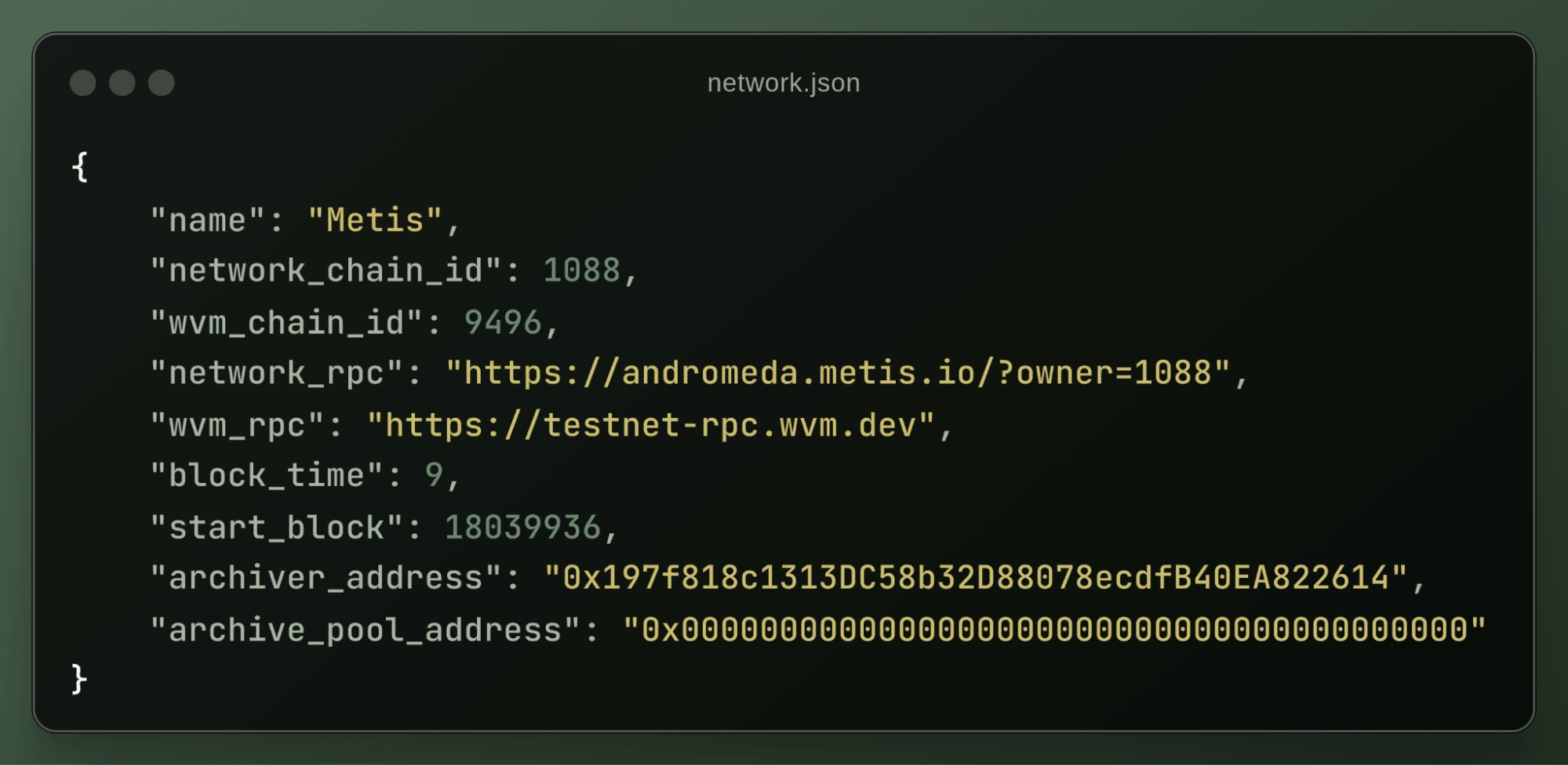

The archiver_pk refers to the private key of the LN wallet, which is used to pay gas fees on the LN for data posting. The network variable points to the path of your network configuration file used for the ExEx. A typical network configuration file looks like this:

For a more detailed setup guide for your network, check out this guide.

Finally, to implement the Load Network DA ExEx in your Reth client, simply import the DA ExEx code into your ExExes directory and it will work off the shelf with your Reth setup. Get the code here.

Explore the first temporal data storage layer on AO HyperBEAM

The Load S3 storage layer is built on top of HyperBEAM as a device, and ao network for data programmability. At its core, the [email protected] device – a HyperBEAM s3 object-storage provider – is the heart of the storage layer.

The HyperBEAM S3 device offers maximum flexibility for HyperBEAM node operators, allowing them to either spin up MinIO clusters in the same location as the HyperBEAM node and rent out their available storage, or connect to existing external clusters, offering native integration between hb's s3 and developers' existing storage clusters. For instance, Load's S3 device is co-located with the MinIO clusters.

To start using the Load S3 temporary storage layer today, checkout the , and the sections.

Load S3’s MinIO cluster, forming the storage layer, runs on 4 nodes with erasure coding enabled. Data is split into data and parity blocks, then striped across all nodes. This allows the system to tolerate the loss of up to two nodes without data loss or service interruption. Unlike full replication, which stores complete copies of each object on multiple nodes, erasure coding provides redundancy with lower storage overhead, ensuring durability while keeping capacity usage efficient.
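The storage-overhead comparison can be worked through with a short calculation. The sketch below assumes the four nodes hold 2 data shards and 2 parity shards per stripe; that split is an assumption consistent with tolerating two node losses, not a configuration stated in this document.

```rust
/// Raw-to-usable storage ratio for erasure coding with `data` data shards
/// and `parity` parity shards per stripe.
fn erasure_overhead(data: u32, parity: u32) -> f64 {
    f64::from(data + parity) / f64::from(data)
}

/// Raw-to-usable ratio for full replication that survives `failures` node
/// losses: one original plus one extra copy per tolerated failure.
fn replication_overhead(failures: u32) -> f64 {
    f64::from(failures + 1)
}
```

Under that assumption, `erasure_overhead(2, 2)` is 2.0, while `replication_overhead(2)` is 3.0: both survive two node losses, but erasure coding stores two bytes per usable byte instead of three.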

A four-node configuration also enables automatic data healing. When a failed node comes back online or a new node replaces it, missing blocks are rebuilt from the remaining healthy nodes in real-time, without taking the cluster offline. Object integrity is verified using per-object checksums, and data availability can be asserted using S3 metadata, such as size, timestamp, and ETag – ensuring each object is present, intact, and retrievable.

The Load S3 layer inherits these guarantees by offloading them to a battle-tested distributed object storage system, in this implementation, MinIO. In the future, the Load S3 decentralized network, consisting of multiple S3 HyperBEAM nodes, will have these properties available out of the box, without the need to re-engineer them from scratch.

The [email protected] device has been designed with a built-in data protocol to natively handle ANS-104 DataItems offchain temporary storage. This approach translates our rationale: HyperBEAM s3 nodes can store signed & valid ANS-104 DataItems temporarily, that can be pushed anytime, when needed, to Arweave, while maintaining the DataItem’s provenance and determinism (e.g. ID, signature, timestamp, etc). Learn more about data provenance, lineage and governance here.

For a direct low level ANS-104 DataItem data streaming from LS3, use the HyperBEAM gateway instead — access the offchain dataitem under:

Given the S3 device’s native integration with objects serialized and stored as ANS-104 DataItems, we considered DataItem accessibility, such as resolving via Arweave gateways.

Being an S3 device, we were able to benefit from HyperBEAM’s modular architecture, so we extended HyperBEAM’s gateway: we built the module and extended the by integrating the hb_gateway_s3 store module as a fallback extension to the Arweave’s GraphQL API.

Additionally, the store order in hb_opts.erl has been modified to add S3 offchain DataItem retrieval as a fallback: HyperBEAM's cache module first, then the Arweave gateway, then S3 (offchain). Offchain DataItems should have the Data-Protocol : Load-S3 tag to be recognized by the subindex.

With these extension components in place, an hb node running the ~ device benefits from the Hybrid Gateway, which can resolve both onchain and offchain dataitems.
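The fallback order (cache, then Arweave gateway, then S3 offchain) can be sketched as a first-hit lookup chain. This is an illustration in Rust rather than the Erlang store configuration; `Store`, `LOOKUP_ORDER`, and the `lookup` callback are hypothetical stand-ins for the real store modules.

```rust
/// Stores in lookup order: cache, then Arweave gateway, then S3 (offchain).
#[derive(Debug, Clone, Copy, PartialEq)]
enum Store {
    Cache,
    ArweaveGateway,
    S3Offchain,
}

const LOOKUP_ORDER: [Store; 3] = [Store::Cache, Store::ArweaveGateway, Store::S3Offchain];

/// Tries each store in order and returns the first hit together with the
/// store that served it. `lookup` stands in for the real store read.
fn resolve<F>(id: &str, lookup: F) -> Option<(Store, Vec<u8>)>
where
    F: Fn(Store, &str) -> Option<Vec<u8>>,
{
    LOOKUP_ORDER
        .iter()
        .find_map(|&store| lookup(store, id).map(|data| (store, data)))
}
```

An ID that exists only offchain falls through the first two stores and is served by `Store::S3Offchain`; an ID present everywhere is served from the cache.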

For a higher level trust assumption, upload data to LS3 using the Turbo-compliant which comes with a built-in system of signed receipts.

In the current release, Load S3 is a storage layer consisting of a single centralized yet verifiable storage provider (HyperBEAM node running the [email protected] device components).

This early-stage testing layer offers trust assumptions similar to those of other centralized services in the Arweave ecosystem, such as ANS-104 bundlers. Load S3's gradual evolution from a layer to a decentralized network built on top of the ao network will remove the centralized and trust-based components, one by one, to reach a trustless, verifiable and incentivized temporal data storage network.

Besides the Hybrid Gateway, nodes like s3-node-1 support a highly optimized low-level dataitems streaming leveraging precomputed dataitem data start-byte offset and range streaming from the S3 cluster directly.

The sidecar bypasses the need to deserialize the dataitem in order to extract useful information such as its tags and data, dramatically reducing resolution latency.

On s3-node-1 — the sidecar is available under https://gateway.s3-node-1.load.network/resolve/:offchain-dataitemid

And to download the full LS3 DataItem binary (the .ans104 file), you can use the following endpoint: https://gateway.s3-node-1.load.network/binary/:offchain-dataitemid
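To illustrate how a precomputed data start-byte offset enables range streaming, the sketch below turns an offset and object size into a standard HTTP `Range` header value. How the sidecar actually stores and uses the offset is not described here, so the function name and parameters are assumptions.

```rust
/// Builds an HTTP `Range` header value covering the data section of a
/// DataItem, given the byte offset where the data starts inside the stored
/// object and the total object size. Returns `None` if there is no data.
fn data_range_header(data_start: u64, object_size: u64) -> Option<String> {
    if data_start >= object_size {
        return None;
    }
    // HTTP byte ranges are inclusive on both ends.
    Some(format!("bytes={}-{}", data_start, object_size - 1))
}
```

With the offset known in advance, the data can be streamed from the S3 cluster without first reading and parsing the header bytes that precede it.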

Load’s HyperBEAM node running the [email protected] device is available the following endpoint: – developers looking to use the HyperBEAM node as S3 endpoint, can use the official S3 SDKs as long as the used S3 commands are supported by [email protected] - (1:1 parity)

To learn how to start using Load S3 today, check out the following

The Kernel Execution Machine device

The kernel-em NIF (kernel execution machine - [email protected] device) is a HyperBEAM Rust device built on top of wgpu to offer a general GPU-instructions compute execution machine for .wgsl functions (shaders, kernels).

With wgpu being a cross-platform GPU graphics API, hyperbeam node operators can add the KEM device to offer a compute platform for KEM functions. And with the ability to be called from within an ao process through ao.resolve ([email protected] device), KEM functions offer great flexibility to run as GPU compute sidecars alongside ao processes.

This device is experimental, in PoC stage

KEM function source code is deployed on Arweave (example, double integer: ), and the source code TXID is used as the KEM function ID.

A KEM function execution takes 3 parameters: function ID, binary input data, and output size hint ratio (e.g., 2 means the output is expected to be no more than 2x the size of the input).
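The output size hint can be read as a multiplier on the input length. A minimal sketch of that bound, assuming the hint is an integer ratio as in the example above (the function name is hypothetical and not part of the device):

```rust
/// Upper bound on the output size, in bytes, for a given input length and
/// output size hint ratio. Returns `None` if the product overflows `u64`.
fn max_output_bytes(input_len: u64, output_size_hint: u64) -> Option<u64> {
    input_len.checked_mul(output_size_hint)
}
```

For a 1024-byte input and a hint of 2, the output is expected to be at most 2048 bytes.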

The KEM takes the input, retrieves the kernel source code from Arweave, and executes the GPU instructions on the hyperbeam node operator's hardware against the given input, then returns the byte results.

Because the kernel execution machine (KEM) is designed for byte I/O and the shader entrypoint is standardized as main, a kernel function must name its entrypoint main, be of type @compute, and take its input and produce its output as bytes. Here is an example skeleton function:

Uniform parameters have been introduced as well, allowing you to pass configuration data and constants to your compute shaders. Uniforms are read-only data that remains constant across all invocations of the shader.

Here is an example of a skeleton function with uniform parameters:

Using the image glitcher kernel function -

device source code:

hb device interface:

nif tests:

ao process example:

About LN-ExEx Data Protocol on Arweave

The LN-ExEx data protocol on Arweave is responsible for archiving Load Network's full block data, which is posted to Arweave using the Arweave Data Uploader Execution Extension (ExEx).

After the rebrand from WeaveVM to Load Network, all the data protocol tags have changed the "*WeaveVM*" onchain term (Arweave tag) to "*LN*"

The data protocol transactions follow the ANS-104 data item specifications. Each Load Network block is posted on Arweave, after borsh-brotli encoding, with the following tags:

Reth ExEx Archiver Address:

Arweave-ExEx-Backfill Address:

Connect any EVM network to Load Network

Load Network Archiver is an ETL archive pipeline for EVM networks. It's the simplest way to interface with LN's permanent data feature without smart contract redeployments.

LN Archiver is the ideal choice if you want to:

Interface with LN's permanent data settlement and high-throughput DA

Maintain your current data settlement or DA architecture

Have an interface with LN without rollup smart contract redeployments

Avoid codebase refactoring

Run An Instance

To run your own node instance of the load-archiver tool, check out the detailed setup guide on github:

Guidance on How To Deploy OP-Stack Rollups on Load Network

The OP Stack is a generalizable framework spawned out of Optimism’s efforts to scale the Ethereum L1. It provides the tools for launching a production-quality Optimistic Rollup blockchain with a focus on modularity. Layers like the sequencer, data availability, and execution environment can be swapped out to create novel L2 setups.

The goal of optimistic rollups is to increase L1 transaction throughput while reducing transaction costs. For example, when Optimism users sign a transaction and pay the gas fee in ETH, the transaction is first stored in a private mempool before being executed by the sequencer. The sequencer generates blocks of executed transactions every two seconds and periodically batches them as call data submitted to Ethereum. The “optimistic” part comes from assuming transactions are valid unless proven otherwise.

In the case of Load Network, we have modified OP Stack components to use LN as the data availability and settlement layer for L2s deployed using this architecture.

curl -X POST https://load-s3-agent.load.network/upload/private \

-H "Authorization: Bearer $load_acc_api_key" \

-H "signed: true" \

-H "bucket_name: $bucket_name" \

-H "x-dataitem-name: $dataitem_name" \

-H "x-folder-name: $folder_name" \

-F "[email protected]" \

-F "content_type=application/octet-stream"

curl -X POST https://gateway.s3-node-1.load.network/sign/402 \

-H "Authorization: Bearer $CONTACT_US" \

-H "x-bucket-name: $bucket_name" \

-H "x-load-acc: $load_acc_api_key" \

-H "x-dataitem-key: $dataitem_key.ans104" \

-H "x-402-address: $payee_eoa" \

-H "x-402-amount: $usdc_amount" \

-H "x-expires-minutes: $set_expiry"

curl -X GET https://gateway.load.network/calldata/$LN_TXID

curl -X GET https://gateway.load.network/war-calldata/$LN_TXID

The decimal precompile number (e.g. 0x17 has the Tag Value of 23)

We’ve built on top of the Optimism Monorepo to enable the deployment of optimistic rollups using LN as the L1. The key difference between deploying OP rollups on Load Network versus Ethereum is that when you send data batches to LN, your rollup data is also permanently archived on Arweave via LN’s Execution Extensions (ExExes).

As a result, OP Stack rollups using LN for data settlement and data availability (DA) will benefit from the cost-effective, permanent data storage offered by Load Network and Arweave. Rollups deployed on LN use the native network gas token (tLOAD on Alphanet), similar to how ETH is used for OP rollups on Ethereum.

We’ve released a detailed technical guide on GitHub for developers looking to deploy OP rollups on Load Network. Check it out here and the LN’s fork of Optimism Monorepo here.

| Tag | Value | Description |
| --- | --- | --- |
| Block-Number | $value | Load Network block number |
| Block-Hash | $value | Load Network block hash |
| Client-Version | $value | Load Network Reth client version |
| Network | Alphanet vx.x.x | Load Network Alphanet semver |
| LN:Backfill | $value | Boolean: true if the data was posted by a backfiller, false or absent if it was posted by an archiver |
| Protocol | LN-ExEx | Data protocol identifier |
| ExEx-Type | Arweave-Data-Uploader | The Load Network ExEx type |
| Content-Type | application/octet-stream | Arweave data transaction MIME type |
| LN:Encoding | Borsh-Brotli | Transaction's data encoding algorithms |

fn execute_kernel(

kernel_id: String,

input_data: rustler::Binary,

output_size_hint: u64,

) -> NifResult<Vec<u8>> {

let kernel_src = retrieve_kernel_src(&kernel_id).unwrap();

let kem = pollster::block_on(KernelExecutor::new());

let result = kem.execute_kernel_default(&kernel_src, input_data.as_slice(), Some(output_size_hint));

Ok(result)

}

// SPDX-License-Identifier: GPL-3.0

// input as u32 array

@group(0) @binding(0)

var<storage, read> input_bytes: array<u32>;

// output as u32 array

@group(0) @binding(1)

var<storage, read_write> output_bytes: array<u32>;

// a work group of 256 threads

@compute @workgroup_size(256)

// main compute kernel entry point

fn main(@builtin(global_invocation_id) global_id: vec3<u32>) {

}

// SPDX-License-Identifier: GPL-3.0

// input as u32 array

@group(0) @binding(0)

var<storage, read> input_bytes: array<u32>;

// output as u32 array

@group(0) @binding(1)

var<storage, read_write> output_bytes: array<u32>;

// uniform parameters for configuration

@group(0) @binding(2)

var<uniform> params: vec2<u32>; // example: param1, param2

// a work group of 256 threads

@compute @workgroup_size(256)

// main compute kernel entry point

fn main(@builtin(global_invocation_id) global_id: vec3<u32>) {

// Access uniform parameters

let param1 = i32(params.x);

let param2 = i32(params.y);

// your kernel logic here

}

S3 Node 1 (current testnet)

untouched ao compute (canonical)

Blazingly fast ANS-104 dataitems streaming from S3 (sidecar)

Hybrid Gateway

Powers LCP, Turbo upload service and load-s3-agent

x402 integration

supports private, expirable shareable ANS-104 DataItems (S3 objects)

S3 Node 0 (deprecated)

ao compute with offchain dataitems

Hybrid Gateway

Serverless quantum functions runtime (simulation)

The quantum_runtime_nif is the foundation of the [email protected] device: a quantum computing runtime built on top of roqoqo simulation framework. This hyperbeam device enables serverless quantum function execution, positioning hyperbeam nodes running this device as providers of serverless functions compute.

The device supports quantum circuit execution, measurement-based quantum computation, and provides a registry of pre-built quantum functions including superposition states, quantum random number generation, and quantum teleportation protocols.

This device is currently simulation-based using roqoqo-quest backend - for educational purposes only

Quantum computing makes use of quantum mechanical phenomena such as superposition and entanglement to process information in fundamentally different ways than classical computers.

Unlike classical bits that exist in definite states (0 or 1), quantum bits (qubits) can exist in superposition of both states simultaneously, enabling parallel computation across multiple possibilities.

The [email protected] device, as per its current implementation, provides a serverless quantum function execution environment. It uses the roqoqo simulation backend for development and testing, but can be adapted to real quantum computation using services like or other quantum cloud providers such as IBM Quantum Platform, with minimal device code changes.

The device supports quantum circuits with up to 32 qubits and provides a registry of whitelisted quantum functions that can be executed through HTTP calls or via ao messaging.

superposition: creates quantum superposition state on a single qubit

quantum_rng: quantum (pseudo)random number generator using multiple qubits

bell_state: creates entangled Bell states between qubits

quantum_teleportation: implements quantum teleportation protocol

The compute() function takes 3 inputs: the number of qubits to initialize, a function ID from the serverless registry, and a list of qubit indices to measure. It returns a HashMap containing the measurement results.

Generate Quantum Random Numbers

hb device interface:

nif interface:

quantum functions registry:

runtime core:

Reconstructing an EVM network using its load-archiver node instance

The World State Trie, also known as the Global State Trie, serves as a cornerstone data structure in Ethereum and other EVM networks. Think of it as a dynamic snapshot that captures the current state of the entire network at any given moment. This sophisticated structure maintains a crucial mapping between account addresses (both externally owned accounts and smart contracts) and their corresponding states.

Each account state in the World State Trie contains several essential pieces of information:

Current balance of the account

Transaction nonce (tracking the number of transactions sent from this account)

Smart contract code (for contract accounts)

Hash of the associated storage trie (linking to the account’s persistent storage)
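The four fields above map naturally onto a small record type. The sketch below is a simplification for illustration: real EVM balances are 256-bit, and a real client keeps the address-to-state mapping in a Merkle Patricia Trie rather than a flat map. All names are hypothetical.

```rust
use std::collections::HashMap;

/// The four account fields listed above, with simplified widths.
#[derive(Debug, Clone, Default, PartialEq)]
struct AccountState {
    balance: u128,          // real EVM balances are 256-bit
    nonce: u64,             // number of transactions sent from this account
    code: Vec<u8>,          // empty for externally owned accounts
    storage_root: [u8; 32], // hash of the account's storage trie
}

/// A flat stand-in for the World State: 20-byte address -> account state.
type WorldState = HashMap<[u8; 20], AccountState>;

/// Contract accounts are the ones that carry code.
fn is_contract(account: &AccountState) -> bool {
    !account.code.is_empty()
}
```

An externally owned account has an empty `code` field, so `is_contract` returns false for it.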

This structure effectively represents the current status of all assets and relevant information on the EVM network. Each new block contains a reference to the current global state, enabling network nodes to efficiently verify information and validate transactions.

An important distinction exists between the World State Trie database and the Account Storage Trie database. While the World State Trie database maintains immutability and reflects the network’s global state, the Account Storage Trie database remains mutable with each block. This mutability is necessary because transaction execution within each block can modify the values stored in accounts, reflecting changes in account states as the blockchain progresses.

The core focus of this article is demonstrating how Load Network Archivers’ data lakes can be leveraged to reconstruct an EVM network’s World State. We’ve developed a proof-of-concept library in Rust that showcases this capability using a customized Revm wrapper. This library abstracts the complexity of state reconstruction into a simple interface that requires just 10 lines of code to implement.

Here’s how to reconstruct a network’s state using our library:

The reconstruction process follows a straightforward workflow:

The library connects to the specified Load Network Archive network

Historical ledger data is retrieved from the Load Network Archiver data lakes

Retrieved blocks are processed through our custom minimal EVM execution machine

The EVM StateManager applies the blocks sequentially, updating the state accordingly
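The sequential replay step can be illustrated with a toy state machine. This sketch is not the library's API and does not execute EVM bytecode: blocks are reduced to plain value transfers, addresses to single bytes, and every type and function name is hypothetical.

```rust
use std::collections::HashMap;

/// A value transfer, the only transaction kind this sketch handles.
struct Transfer {
    from: u8,
    to: u8,
    amount: u64,
}

/// A block reduced to its number and transfers.
struct Block {
    number: u64,
    transfers: Vec<Transfer>,
}

/// Replays blocks in ascending block-number order over a balance map and
/// returns the resulting state. Transfers the sender cannot afford are skipped.
fn replay(mut blocks: Vec<Block>, mut state: HashMap<u8, u64>) -> HashMap<u8, u64> {
    blocks.sort_by_key(|b| b.number);
    for block in &blocks {
        for tx in &block.transfers {
            let from_balance = state.get(&tx.from).copied().unwrap_or(0);
            if from_balance < tx.amount {
                continue;
            }
            state.insert(tx.from, from_balance - tx.amount);
            *state.entry(tx.to).or_insert(0) += tx.amount;
        }
    }
    state
}
```

Ordering matters: the blocks are sorted before replay because a transfer in block 2 may spend funds that only arrived in block 1.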

This proof-of-concept implementation is available on GitHub:

has evolved beyond its foundation as a decentralized archive node. This proof of concept demonstrates how our comprehensive data storage enables full EVM network state reconstruction - a capability that opens new possibilities for network analysis, debugging, and state verification.

We built this PoC to showcase what’s possible when you combine permanent storage with proper EVM state handling. Whether you’re analyzing historical network states, debugging complex transactions, or building new tools for chain analysis, the groundwork is now laid.

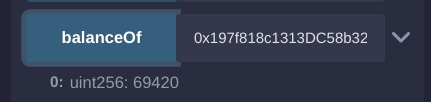

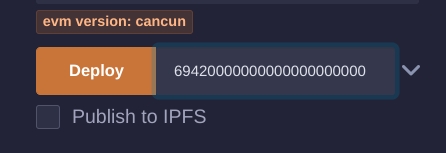

Tutorial on how to deploy an ERC20 on Load Network

Before deploying, make sure Load Network is configured in your MetaMask wallet. Check the Network Configurations.

For this example, we will use the ERC20 token template provided by the OpenZeppelin smart contract library.

Now that you have your contract source code ready, compile the contract and hit deploy with an initial supply.

After deploying the contract successfully, check your EOA balance!

The first ao token x402 facilitator

This was a demo for educational purposes only. For a production-ready x402 facilitator on ao, check out the hyper-x402 section.

is an fork with custom ao network support that works alongside the rest of the supported networks (EVMs, Solana, Avalanche, Sei, etc.), meaning you can run the facilitator not only with those networks, but also with ao token support.

The hyper-x402 ao facilitator is hosted under hyper-x402.load.network (supports base & ao) and the best example to showcase it, is via the axum example

This example repository comes with a tailored axum example that works with the ao network ($AO as payment token). the changes made to main.rs are:

fallback to our hosted facilitator if the ENV doesn't set the local facilitator (running at port 8080)

creates a PriceBuilderTag set to pay the AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA address:

it's also possible to define a token struct instance on ao without being tied to $AO as payment token (default instance), by using the by_ao_network function, at the moment only AO, ARIO and USDA are supported

the route /protected-route is protected with a cost of 0.000000000001 $AO

it's required to have a wallet JWK saved in the ./wallet.json file location (gitignored - use burner!!) in order to complete the test flow (required balance >= 0.000000000001 AO)

In order to GET the /protected-route and pay to access its gated content, you need to:

run the axum server binaries: cargo run --bin x402-axum-example

run the script that automates accessing the protected endpoint and handles the payment creation and submission: cargo run --bin ao_payment_helper

Expected result:

onchain proof: https://www.ao.link/#/message/_zrmkURCLrHOKWzsErmFTEdXzcUEur1iWGErepju3w0

The [email protected] device is the first x402-compliant facilitator implemented with native ao token support, built as a HyperBEAM device (NIF). This facilitator's API follows the spec used in x402-rs.

N.B: The [email protected] is an early WIP facilitator with partial x402-standard API compatibility. Promising work is being done, but treat it as a PoC for now. Endpoint: https://x402-node-1.load.network/[email protected]/supported

Following the x402 hype mid-October 2025 and its adoption within the ai agents stack, we asked ourselves: is there any better compute platform for ai agents than ao? Unbiasedly speaking, no. ao's alien compute enables functionalities like onchain verifiable LLMs (already live on the network), API-keyless autonomous trading agents, permissionless access to Arweave's datalake (WeaveDrive), etc.

So following that hype, we thought, if a network should be supported in the x402 stack, it's the ao network (and its token standard). Additionally, the encapsulation of the [email protected] device in the HyperBEAM OS exposes the facilitator natively to the ao canonical stack. To put it shortly: this device can be integrated into an existing EVM/SOL/* x402 facilitator, adding ao support (maintaining the API schema compatibility) AND offering additional routes for trustless compute infra for ai agents.

Compared to EVM-based facilitators, this HyperBEAM device offers:

~270ms total latency for the complete request lifecycle

native payment history storage via ao's Arweave settlement (GraphQL, state lookup)

potential native route to offer ao's compute alongside the payments railway through a single facilitator

partial-compatibility with the x402 facilitator API standard

micropayments support with near-zero gas fees

no reliance on API keys to function (no JSON-RPC API keys needed)

support for AR/ETH/SOL ANS-104 signatures

| Feature | Status |
| --- | --- |
| x402-rs API schema minimal compatibility | done |
| Client Library | done |
| ao tokens support | done |
| payments storage | done (native feature via ao) |
| server middleware | provide ready-to-use integration for Rust web frameworks such as axum and tower (also /settle /verify routes) - wip |
| integration in an x402-rs fork | routing ao requests to [email protected] from an EVM/SOL facilitator - wip |

On HyperBEAM’s [email protected] device, the client’s X-Payment header is an AO message signed by the user and serialised as a base64 string, but not posted to Arweave. Technically speaking, it is a signed ANS-104 dataitem that follows the ao protocol.

The HTTP request received by the dev_x402_facilitator.erl module is handed to the Rust NIF (x402), where the NIF reconstructs the dataitem and verifies its integrity (payment token, transfer amount, Recipient, ao protocol tags, etc.), then posts the signed dataitem to Arweave and makes the AO scheduler aware of the message (payment settlement). On success, the Erlang side of the device returns the ao message ID along with the unlocked payload data. The whole process takes under 300 ms with near-zero fees, beating the EVM counterpart on speed and price. For an in-practice example, check out the complete flow test here and this onchain proof.

On the other hand, EVM facilitators (e.g. x402-rs) have the same HTTP schema but the payment is an ERC‑3009 transferWithAuthorization: the client signs EIP‑712 typed data, the facilitator’s server parses the payload, talks to the target chain via JSON-RPC (which in most cases requires API keys to avoid rate limiting), and spends gas to execute the transfer on-chain before responding.

#[rustler::nif]

fn hello() -> NifResult<String> {

Ok("Hello world!".to_string())

}

#[rustler::nif(schedule = "DirtyCpu")]

fn compute(

num_qubits: usize,

function_id: String,

measurements: Vec<usize>,

) -> NifResult<HashMap<String, f64>> {

let runtime = Runtime::new(num_qubits);

match runtime.execute_serverless(function_id, measurements) {

Ok(result) => Ok(result),

Err(_) => Err(rustler::Error::Term(Box::new("execution failed"))),

}

}

curl -X POST "https://hb.load.rs/[email protected]/compute" \

-H "Content-Type: application/json" \

-d '{

"function_id": "quantum_rng",

"num_qubits": 4,

"measurements": [0, 1, 2, 3]

}'

let ao_token = USDCDeployment::by_network(Network::Ao).pay_to(MixedAddress::Offchain(

"AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA".to_string(),

));

let usda_token = USDCDeployment::by_ao_network("USDA").pay_to(MixedAddress::Offchain(

"AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA".to_string(),

));

status=200 OK body=This is a VIP content!

The final result is a complete reconstruction of the network’s World State

Load Network provides a gateway for Arweave's permanent with its own (LN) high data throughput of the permanently stored data into .

Current maximum encoded blob size is 8 MB (8_388_608 bytes).
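The byte figure follows from 8 × 1024 × 1024. A minimal pre-flight check against that limit might look like the sketch below; the constant and function names are hypothetical, not part of any Load Network client.

```rust
/// Maximum encoded blob size stated above: 8 MB = 8 * 1024 * 1024 bytes.
const MAX_ENCODED_BLOB_BYTES: usize = 8 * 1024 * 1024;

/// Returns true when an already-encoded blob fits within the limit.
fn fits_blob_limit(encoded: &[u8]) -> bool {
    encoded.len() <= MAX_ENCODED_BLOB_BYTES
}
```

The check applies to the blob after encoding, so the raw payload that fits depends on how well it compresses.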

Load Network is currently operating in public testnet (Alphanet). It is not recommended for use in production environments.

Understand how to boot a basic Dymension RollApp and how to configure it.

Obtain test tLOAD tokens through our faucet for testing purposes.

Monitor your transactions using the Load Network explorer.

How it works

You may choose to use Load Network as the data availability layer of your RollApp. We assume that you know how to boot and configure the basics of your dymint RollApp. As an example, you may use the https://github.com/dymensionxyz/rollapp-evm repository.

The example uses the "mock" DA client. To use Load Network, set the following environment variables before the config generation step that uses init.sh:

export DA_CLIENT="weavevm" # This is the key change

export WVM_PRIV_KEY="your_hex_string_wvm_priv_key_without_0x_prefix"

init.sh will generate a basic configuration for da_config.json in dymint.toml, which should look like this:

da_config = '{"endpoint":"https://alphanet.load.network","chain_id":9496,"timeout":60000000000,"private_key_hex":"your_hex_string_load_priv_key_without_0x_prefix"}'
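The private-key form of da_config can also be assembled programmatically. The following is a minimal sketch in Rust, not part of dymint or rollapp-evm: the function names are hypothetical, the key check assumes a 32-byte key (64 hex characters), and reading the timeout 60000000000 as nanoseconds (60 seconds) is an assumption. The values are inserted without JSON escaping, so it only suits plain endpoints and keys.

```rust
use std::time::Duration;

/// Hypothetical check: the key must be 64 hex characters with no 0x prefix.
fn validate_private_key_hex(key: &str) -> Result<(), String> {
    if key.starts_with("0x") || key.starts_with("0X") {
        return Err("remove the 0x prefix from the private key".to_string());
    }
    if key.len() != 64 || !key.chars().all(|c| c.is_ascii_hexdigit()) {
        return Err("expected 64 hexadecimal characters".to_string());
    }
    Ok(())
}

/// Builds the da_config line in the shape shown in this section.
/// The timeout is written as an integer number of nanoseconds (assumption).
fn da_config_line(
    endpoint: &str,
    chain_id: u64,
    timeout: Duration,
    key_hex: &str,
) -> Result<String, String> {
    validate_private_key_hex(key_hex)?;
    Ok(format!(
        "da_config = '{{\"endpoint\":\"{}\",\"chain_id\":{},\"timeout\":{},\"private_key_hex\":\"{}\"}}'",
        endpoint,
        chain_id,
        timeout.as_nanos(),
        key_hex
    ))
}
```

Calling `da_config_line("https://alphanet.load.network", 9496, Duration::from_secs(60), key)` with a valid key yields a line with the same fields as the example above, and a key that still carries the 0x prefix is rejected before anything is written.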

In this example we use the PRIVATE_KEY of your LN address. This is not the most secure way to handle transaction signing, which is why we also support web3signer as a signing method. To enable web3signer, either change the init.sh script to add the corresponding fields or edit da_config in dymint.toml directly.

For example:

da_config = '{"endpoint":"https://alphanet.load.network","chain_id":9496,"timeout":"60000000000","web3_signer_endpoint":"http://localhost:9000"}'

To enable TLS, add the following fields to the JSON:

web3_signer_tls_cert_file

web3_signer_tls_key_file

web3_signer_tls_ca_cert_file

Web3 signer

Web3Signer is a tool by Consensys which allows remote signing.

Using a remote signer comes with risks; please read the web3signer docs. However, this is the recommended way to sign transactions for enterprise users and production environments. Web3Signer is not maintained by the Load Network team. An example of the simplest local web3signer deployment (for testing purposes): https://github.com/allnil/web3signer_test_deploy

Example configuration:

In the rollapp-evm log you will eventually see something like this:

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.0;

/// @title Useless Testing Token

/// @notice Just a testing shitcoin

/// @dev SupLoad gmgm

/// @author pepe frog

import "@openzeppelin/contracts/token/ERC20/ERC20.sol";

contract WeaveGM is ERC20 {

constructor(uint256 initialSupply) ERC20("supLoad", "LOAD") {

_mint(msg.sender, initialSupply);

}

}

About Load Cloud Platform

Uploading data onchain shouldn’t be any more difficult than using Google Drive. The reason tools like Google Drive are popular is because they just work and are cheap/free. Their hidden downsides? You don’t own your data, it’s not permanent, and – especially for blockchain projects – it’s not useful for application developers.

Users just want to put their data somewhere and forget about the upkeep. Developers just want a permanent reference to their data that resolves in any environment. Whichever you are, we built cloud.load.network for you.

The Load Cloud is an all-in-one tool to interact with various Load Network storage interfaces and pipelines: one UI, one API key, various integrations, with web2 UX.

It has been a few months since the initial alpha. Since then, we have been working towards a more complete dashboard for the onchain data center.

This builds towards our vision of the onchain data center: meeting developers where they are, with familiar devex and 1:1 parallels with the web2 tools they already use. The decentralized compute layer is in place via Load’s EVM/AO layers, and storage via the HyperBEAM-powered S3 device.

The V2 release of the Load Cloud Platform (LCP) introduces several highly requested features:

private ANS-104 DataItems

DataItems as first-class s3 objects

access controlled DataItems (s3 objects)

[email protected] device upgrades

V2 introduces the concept of Load accounts and API keys. A unified auth layer finally enables us to provision scoped access to Load’s HyperBEAM S3 layer and build access control for data.

Under the hood, Load Cloud email login uses to create an EVM wallet. This wallet’s keys have access to the Load authentication API and generate keys in the dashboard that can read and write from S3.

This approach enables API access to offchain services with a wallet address as the primary identity, leaving the system open in the future for offchain components to handle things like payments and native integration with onchain compute.

Private data and access control are two of the most requested features we have received since we started working on the onchain data center roadmap.

How can I access my data without necessarily encrypting it and storing the encrypted data onchain? How can I gate access to my data?

Private ANS-104 DataItems are possible today through the introduction of private buckets along with load_acc-gated JWT tokens. Before private dataitems, all Load S3 dataitems were public and stored according to the `offchain-dataitems` data protocol. As of today, however, any LCP user can:

Create a private bucket and select which LCP users (load_acc api keys) can access the bucket’s objects

Upload data to the private bucket and have it stored as ANS-104 Dataitems

Generate expiring pre-signed URLs for sharing private data

Control the access to the private bucket’s dataitems by adding/removing load_acc API keys

Therefore, LCP and Load S3 users can now store DataItems privately in their private S3 buckets and control access to the S3 objects, without the cryptography (encryption/decryption) overhead that would be needed to keep the data private if it were pushed to Arweave. Load S3 dataitems can be shared via signed, auto-expiring URLs, or made permanently public on Arweave via Load’s Turbo integration.

As mentioned above, LCP V2 lets users control access to their privately stored offchain DataItems. Access is gated by the uploader’s master load_acc API key. In the next patch release, we will allow users to add other LCP users via their registered email address.

With this upgrade, private offchain ANS-104 DataItems are now the first-class data format for S3 objects in Load S3’s LCP.

In order to make the private offchain DataItems accessible to their rightful whitelisted users, we had to upgrade the HyperBEAM device’s sidecar and make it possible to:

Generate presigned URLs (JWT-gated) for private dataitems, given the private bucket name, the dataitem’s S3 key, a user’s load_acc and an expiry timestamp. The device validates that the requester owns the bucket and the dataitem, then generates the presigned URL.

Stream the private dataitem’s data field directly from the S3 cluster to the user’s browser after JWT validation, without deserializing the ANS-104 dataitem.
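The access rules described above (reader keys added to or removed from a private bucket, owner-only issuing of expiring share links) can be modeled in a few lines. This is a minimal in-memory sketch in Rust, not the device's implementation: `PrivateBucket`, `ShareGrant` and all method names are hypothetical, the grant stands in for the claims a JWT-gated presigned URL would carry, and dataitem-level ownership is reduced to bucket ownership for brevity.

```rust
use std::collections::HashSet;

/// In-memory stand-in for a private bucket (hypothetical type).
struct PrivateBucket {
    owner: String,            // load_acc key of the bucket creator
    readers: HashSet<String>, // load_acc keys allowed to read objects
}

/// Stand-in for the claims carried by an expiring share link.
struct ShareGrant {
    object_key: String,
    expires_at: u64, // Unix time in seconds
}

impl PrivateBucket {
    fn new(owner: &str) -> Self {
        let mut readers = HashSet::new();
        readers.insert(owner.to_string());
        PrivateBucket { owner: owner.to_string(), readers }
    }

    fn add_reader(&mut self, load_acc: &str) {
        self.readers.insert(load_acc.to_string());
    }

    fn remove_reader(&mut self, load_acc: &str) {
        self.readers.remove(load_acc);
    }

    fn can_read(&self, load_acc: &str) -> bool {
        self.readers.contains(load_acc)
    }

    /// Only the bucket owner may issue a grant, and it must expire in the future.
    fn issue_grant(
        &self,
        requester: &str,
        object_key: &str,
        expires_at: u64,
        now: u64,
    ) -> Result<ShareGrant, String> {
        if requester != self.owner {
            return Err("requester does not own the bucket".to_string());
        }
        if expires_at <= now {
            return Err("expiry must be in the future".to_string());
        }
        Ok(ShareGrant { object_key: object_key.to_string(), expires_at })
    }
}

/// A grant covers exactly one object and stops working at its expiry time.
fn grant_allows(grant: &ShareGrant, object_key: &str, now: u64) -> bool {
    grant.object_key == object_key && now < grant.expires_at
}
```

In this model, removing a reader takes effect immediately, while an already issued grant stays valid until its expiry, which is the usual trade-off of self-contained expiring links.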

These features have been released in s3_nif v0.3.1, which is live on s3-node-1.load.network. To check the S3 device sidecar’s upgrades, visit the source code.

The Load S3 HTTP API and ANS-104 data orchestrator is now at v4, with several features needed by the LCP backend and Load S3 clients. The feature in the agent’s v4 release most relevant to this blog post is the /upload/private HTTP POST method, which lets LCP users programmatically push raw data to their private LCP bucket. Requests are authenticated with load_acc API keys, and the data is stored as an ANS-104 dataitem that is prepared and signed by the agent’s wallet.

For more examples, check out the

Today you can use the LCP platform to create buckets, folders and temporarily store data privately in object-storage format. The LCP uses Load's S3 HyperBEAM data storage layer for hotcache storage.

Permanent EigenDA blobs

EigenDA proxy: repository

LN-EigenDA wraps the high-level EigenDA client, exposing endpoints for interacting with the EigenDA disperser in conformance to the OP Alt-DA server spec, and adding disperser verification logic. This simplifies integrating EigenDA into various rollup frameworks by minimizing the footprint of changes needed within their respective services.

It is a Load Network integration that acts as a secondary backend of eigenda-proxy. In this scope, Load Network provides an EVM gateway/interface for EigenDA blobs on Arweave's Permaweb. This removes the need for trust assumptions and for relying on centralized third-party services to sync historical data, and it provides a "pay once, save forever" data storage feature for EigenDA blobs.

Current maximum encoded blob size is 8 MiB (8_388_608 bytes).

Load Network is currently operating as a public testnet (Alphanet); it is not recommended for use in production environments.
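The 8 MiB limit on encoded blobs can be checked on the client before a blob is submitted. The following is a minimal sketch in Rust, assuming the limit applies to the byte length of the already encoded payload; `MAX_ENCODED_BLOB_BYTES` and `check_blob_size` are hypothetical names for illustration and are not part of eigenda-proxy.

```rust
/// Maximum encoded blob size quoted in this section: 8 MiB.
const MAX_ENCODED_BLOB_BYTES: usize = 8 * 1024 * 1024; // 8_388_608

/// Hypothetical pre-flight check: rejects payloads above the quoted limit.
fn check_blob_size(encoded: &[u8]) -> Result<(), String> {
    if encoded.len() > MAX_ENCODED_BLOB_BYTES {
        return Err(format!(
            "encoded blob is {} bytes, above the {} byte limit",
            encoded.len(),
            MAX_ENCODED_BLOB_BYTES
        ));
    }
    Ok(())
}
```

A payload of exactly 8_388_608 bytes passes this check, and one byte more is rejected. Whether the service treats the boundary as inclusive is not stated here, so the comparison is an assumption.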

Review the configuration parameters table and .env file settings for the Holesky network.

Obtain test tLOAD tokens through our faucet for testing purposes.

Monitor your transactions using the Load Network explorer.

Please double-check the .env file values if you start the eigenda-proxy binary with env vars; they may conflict with flags.

Start eigenda proxy with LN private key:

POST command:

GET command:

Web3Signer is a tool by Consensys which allows remote signing.

Using a remote signer comes with risks; please read the web3signer docs. However, this is the recommended way to sign transactions for enterprise users and production environments. Web3Signer is not maintained by the Load Network team. An example of the simplest local web3signer deployment (for testing purposes):

Start eigenda proxy with signer:

Start web3signer with TLS:

use evm_state_reconstructing::utils::core::evm_exec::StateReconstructor;

use evm_state_reconstructing::utils::core::networks::Networks;

use evm_state_reconstructing::utils::core::reconstruct::reconstruct_network;

use anyhow::Error;

async fn reconstruct_state() -> Result<StateReconstructor, Error> {

let network: Networks = Networks::metis();

let state: StateReconstructor = reconstruct_network(network).await?;

Ok(state)

}

# Set environment variables

export DA_CLIENT="weavevm" # This is the key change

export WVM_PRIV_KEY="your_hex_string_wvm_priv_key_without_0x_prefix"

export ROLLAPP_CHAIN_ID="rollappevm_1234-1"

export KEY_NAME_ROLLAPP="rol-user"

export BASE_DENOM="arax"

export MONIKER="$ROLLAPP_CHAIN_ID-sequencer"

export ROLLAPP_HOME_DIR="$HOME/.rollapp_evm"

export SETTLEMENT_LAYER="mock"

# Initialize and start

make install BECH32_PREFIX=$BECH32_PREFIX

export EXECUTABLE="rollapp-evm"

$EXECUTABLE config keyring-backend test

sh scripts/init.sh

# Verify dymint.toml configuration

cat $ROLLAPP_HOME_DIR/config/dymint.toml | grep -A 5 "da_config"

dasel put -f "${ROLLAPP_HOME_DIR}"/config/dymint.toml "max_idle_time" -v "2s"

dasel put -f "${ROLLAPP_HOME_DIR}"/config/dymint.toml "max_proof_time" -v "1s"

dasel put -f "${ROLLAPP_HOME_DIR}"/config/dymint.toml "batch_submit_time" -v "30s"

dasel put -f "${ROLLAPP_HOME_DIR}"/config/dymint.toml "p2p_listen_address" -v "/ip4/0.0.0.0/tcp/36656"

dasel put -f "${ROLLAPP_HOME_DIR}"/config/dymint.toml "settlement_layer" -v "mock"

dasel put -f "${ROLLAPP_HOME_DIR}"/config/dymint.toml "node_address" -v "http://localhost:36657"

dasel put -f "${ROLLAPP_HOME_DIR}"/config/dymint.toml "settlement_node_address" -v "http://127.0.0.1:36657"

# Start the rollapp

$EXECUTABLE start --log_level=debug \

--rpc.laddr="tcp://127.0.0.1:36657" \

--p2p.laddr="tcp://0.0.0.0:36656" \

--proxy_app="tcp://127.0.0.1:36658"

INFO[0000] weaveVM: successfully sent transaction[tx hash 0x8a7a7f965019cf9d2cc5a3d01ee99d56ccd38977edc636cc0bbd0af5d2383d2a] module=weavevm

INFO[0000] wvm tx hash[hash 0x8a7a7f965019cf9d2cc5a3d01ee99d56ccd38977edc636cc0bbd0af5d2383d2a] module=weavevm

DEBU[0000] waiting for receipt[txHash 0x8a7a7f965019cf9d2cc5a3d01ee99d56ccd38977edc636cc0bbd0af5d2383d2a attempt 0 error get receipt failed: failed to get transaction receipt: not found] module=weavevm

INFO[0002] Block created.[height 35 num_tx 0 size 786] module=block_manager

DEBU[0002] Applying block[height 35 source produced] module=block_manager

DEBU[0002] block-sync advertise block[error failed to find any peer in table] module=p2p

INFO[0002] MINUTE EPOCH 6[] module=x/epochs

INFO[0002] Epoch Start Time: 2025-01-13 09:21:03.239539 +0000 UTC[] module=x/epochs

INFO[0002] commit synced[commit 436F6D6D697449447B5B3130342038203131302032303620352031323920393020343520313633203933203235322031352031343320333920313538203131342035382035352031352038322038203939203132392032333520313731203230382031392032343320313932203139203233352036355D3A32337D]

DEBU[0002] snapshot is skipped[height 35]

INFO[0002] Gossipping block[height 35] module=block_manager

DEBU[0002] Gossiping block.[len 792] module=p2p

DEBU[0002] indexed block[height 35] module=txindex

DEBU[0002] indexed block txs[height 35 num_txs 0] module=txindex

INFO[0002] Produced empty block.[] module=block_manager

DEBU[0002] Added bytes produced to bytes pending submission counter.[bytes added 786 pending 15719] module=block_manager
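To look up a submission in the Load Network explorer, the transaction hash can be pulled out of the `successfully sent transaction` log line. The following is a minimal sketch in Rust, assuming the log format shown above stays as is; `extract_tx_hash` is a hypothetical helper, not part of dymint or rollapp-evm.

```rust
/// Returns the 0x-prefixed, 32-byte transaction hash from a log line that
/// contains "[tx hash 0x...]", or None if the line has no such field.
fn extract_tx_hash(log_line: &str) -> Option<&str> {
    const MARKER: &str = "[tx hash ";
    let start = log_line.find(MARKER)? + MARKER.len();
    let rest = &log_line[start..];
    let end = rest.find(']')?;
    let hash = &rest[..end];
    // 2 characters for "0x" plus 64 hexadecimal digits.
    let valid = hash.len() == 66
        && hash.starts_with("0x")
        && hash[2..].chars().all(|c| c.is_ascii_hexdigit());
    if valid {
        Some(hash)
    } else {
        None
    }
}
```

Only lines that use the `[tx hash ...]` field match. The `wvm tx hash[hash ...]` and `waiting for receipt[txHash ...]` lines use different field names and would need their own markers.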